We are proud to announce that Scailable has been acquired by Network Optix.

For the announcement on the Network Optix website, click here.

The Scailable Middleware.

Enabling effortless edge AI: Deploy, manage, and scale your edge AI solution using Scailable.

High-performance AI on selected hardware

Our edge AI pipeline is remotely configurable, so you can effortlessly deploy your AI models to the edge. We will automatically ensure that you reap the fruits of the latest available AI accelerators. No more recoding for your target hardware: deploy your edge AI solution within minutes.

Using Scailable, you can focus on the application, while we ensure highly performant and modular execution of your AI/ML models on the edge.

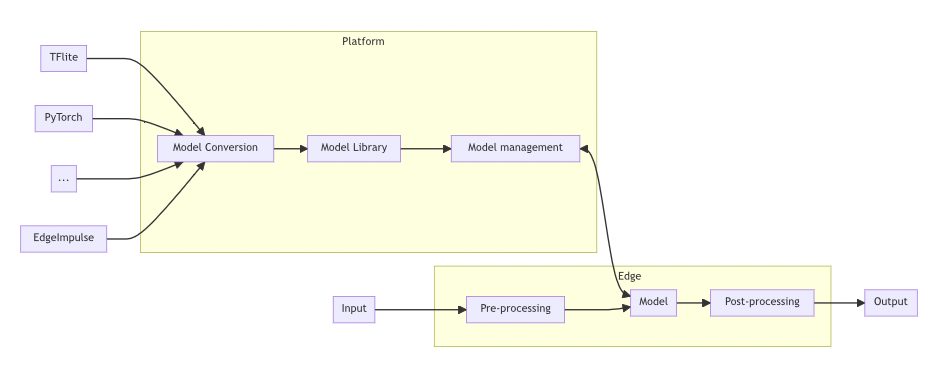

What is Edge AI middleware?

Think about it: what steps are needed in any edge AI project?

- An AI/ML model, created by a data scientist using their favorite modeling platform.

- Edge hardware, on which the full pipeline, from sensor to inference, needs to be developed.

- The higher-level application platform that delivers your business value.

Without appropriate software tools, these steps become needlessly entangled: model and pipeline deployment depend on the target hardware, and your time to market suffers.

When you start building your first edge AI solution, you might think it is enough to move a container with your AI model to a large edge device, write some glue code to access the sensors, and write API integrations (or similar) to power your application. Great. But what if you want to do this on hundreds of devices? And what if you don't want to spend time setting up the pipeline on every device, let alone rebuilding it when your hardware changes?

This is where you need edge AI middleware. The Scailable middleware ensures that you can use your favorite AI training platform to create your models, and that you can deploy them effortlessly, remotely, securely, and in bulk to a large range of selected edge devices. Instantly. You can remotely configure the whole pipeline, without any code. You can even set up your device to capture new training examples in context, so that you can close your AI training loop.

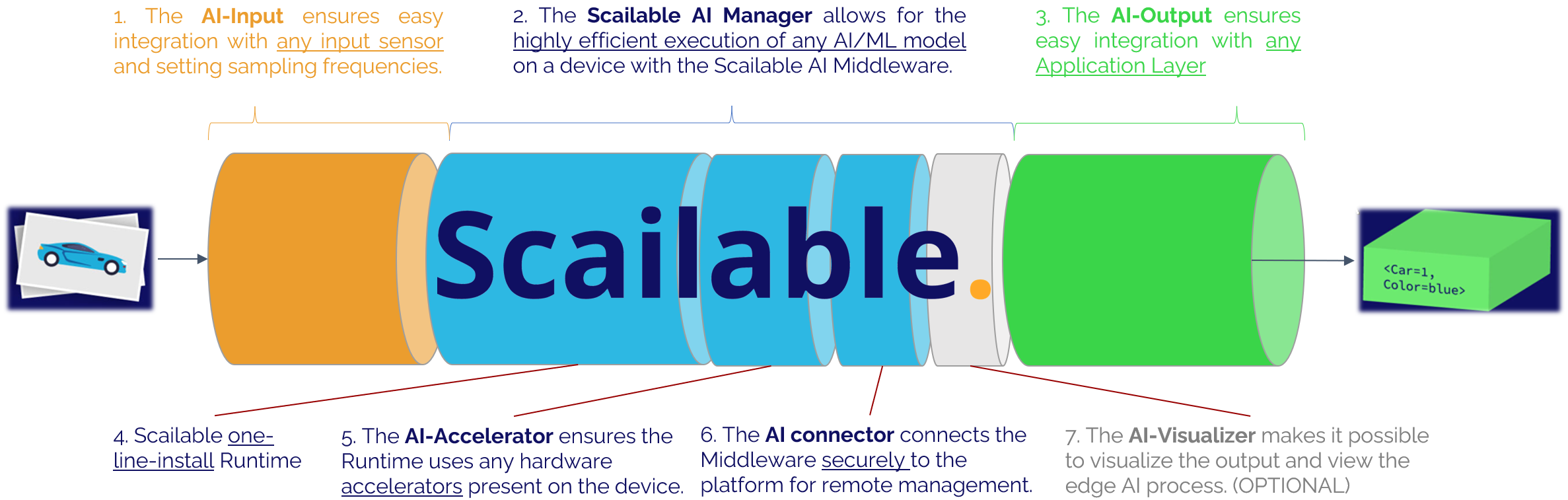

AI middleware: Efficient, modular, edge AI pipelines on any device

Pipelines, pipelines, pipelines

Our edge AI middleware is best visualized as a modular pipeline. First comes input processing. Next, we run your model in a runtime that is specifically suited to the device, so you gain the benefits of the available chipset. The connector ensures your device pipeline can be configured remotely (OTA), and the visualizer ensures (on selected devices) that you can see your AI model in action. The output layers enable easy integration with higher-level application platforms, inference distribution using a variety of protocols, and the ability to store input examples and inferences for re-training.
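To make the modular idea concrete, here is a minimal sketch of a pipeline built from swappable stages. Every name in it (`Pipeline`, `scale_input`, `threshold_model`, and the fields `input_stage`, `runtime`, and `outputs`) is a hypothetical stand-in chosen for illustration. It does not use or represent the Scailable SDK, and the "model" is a trivial threshold rather than a real runtime.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical illustration only: none of these names come from the Scailable SDK.
Frame = List[float]


def scale_input(frame: Frame) -> Frame:
    """Input processing: scale raw sensor values by the largest value in the frame."""
    peak = max(frame) if frame else 0.0
    return [value / peak for value in frame] if peak else list(frame)


def threshold_model(frame: Frame) -> dict:
    """Stand-in for a device-specific runtime: label a frame by its mean value."""
    score = sum(frame) / len(frame) if frame else 0.0
    return {"score": score, "label": "active" if score > 0.5 else "idle"}


@dataclass
class Pipeline:
    """Input stage, then runtime, then any number of output handlers."""
    input_stage: Callable[[Frame], Frame]
    runtime: Callable[[Frame], dict]
    outputs: List[Callable[[dict], None]] = field(default_factory=list)

    def run(self, frame: Frame) -> dict:
        result = self.runtime(self.input_stage(frame))
        for send in self.outputs:  # e.g. forward to an application, or store for re-training
            send(result)
        return result


# One output handler that simply stores inferences in memory.
stored: List[dict] = []
pipeline = Pipeline(scale_input, threshold_model, outputs=[stored.append])
result = pipeline.run([2.0, 4.0, 4.0])
```

Because each stage is a plain callable, swapping the runtime or adding an output handler leaves the other stages untouched, which is the property a modular pipeline is meant to provide.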

Our pipelines are available for a variety of supported devices and supported inputs and outputs. For experienced AI developers, our full SDK is available to tailor the Scailable pipeline to your own needs.

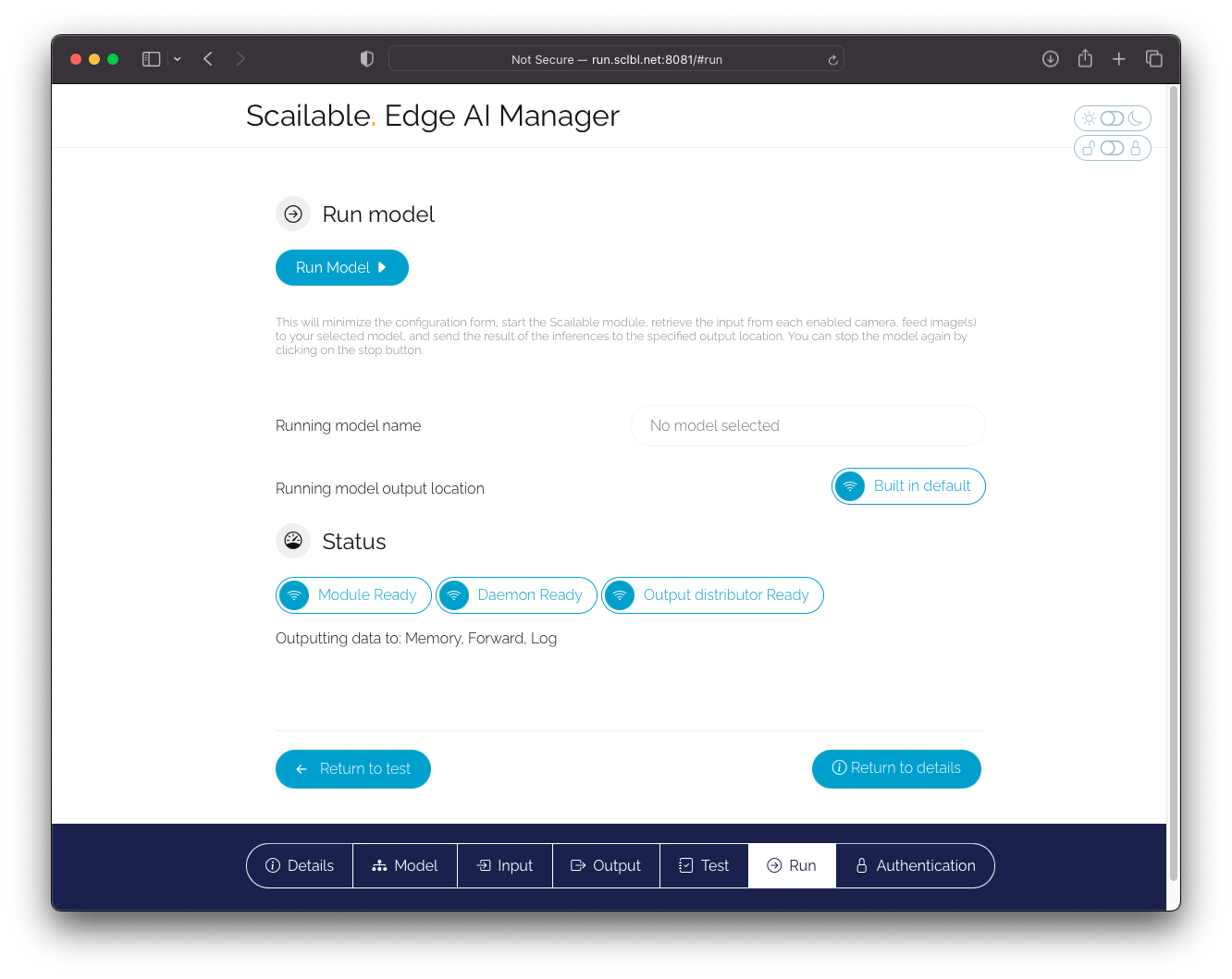

Edge AI remote management

The Scailable platform allows you to effortlessly manage edge AI applications that use the Scailable middleware. You can easily access the models in your AI library and your devices, and manage them with OTA (over-the-air) deployment.

The Scailable platform allows you to (mass) deploy AI/ML models to target edge devices without the need for any on-device engineering. Effectively, you can "swap" the models that run on any edge device with the Scailable middleware installed, and remotely change the full pipeline configuration. You can do all of this securely and at scale: you can flexibly group devices and deploy models to groups of devices. Finally, through our advanced platform API, you can integrate the Scailable platform directly into your CI/CD pipelines or create your own AI model management interface on top of the Scailable platform.
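The idea of grouping devices and deploying a model to a whole group can be sketched as follows. This is a purely in-memory illustration: the `Fleet` class, its methods, and the device and model identifiers are all hypothetical, and nothing here calls or mirrors the actual Scailable platform API.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical in-memory registry; it does not talk to the Scailable platform.


class Fleet:
    def __init__(self) -> None:
        self.groups: Dict[str, List[str]] = defaultdict(list)  # group name -> device ids
        self.assigned: Dict[str, str] = {}                      # device id -> model id

    def add_device(self, device_id: str, group: str) -> None:
        """Register a device under a group."""
        self.groups[group].append(device_id)

    def deploy(self, group: str, model_id: str) -> List[str]:
        """Assign model_id to every device in the group and return the updated device ids."""
        devices = list(self.groups.get(group, []))
        for device_id in devices:
            self.assigned[device_id] = model_id
        return devices


fleet = Fleet()
fleet.add_device("cam-01", "warehouse")
fleet.add_device("cam-02", "warehouse")
fleet.add_device("cam-03", "office")

# "Swap" the model on every warehouse device in one call.
updated = fleet.deploy("warehouse", "detector-v2")
```

In a real integration, the body of `deploy` would be replaced by calls to the platform API from a CI/CD job; the grouping logic is what lets one release step target many devices at once.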

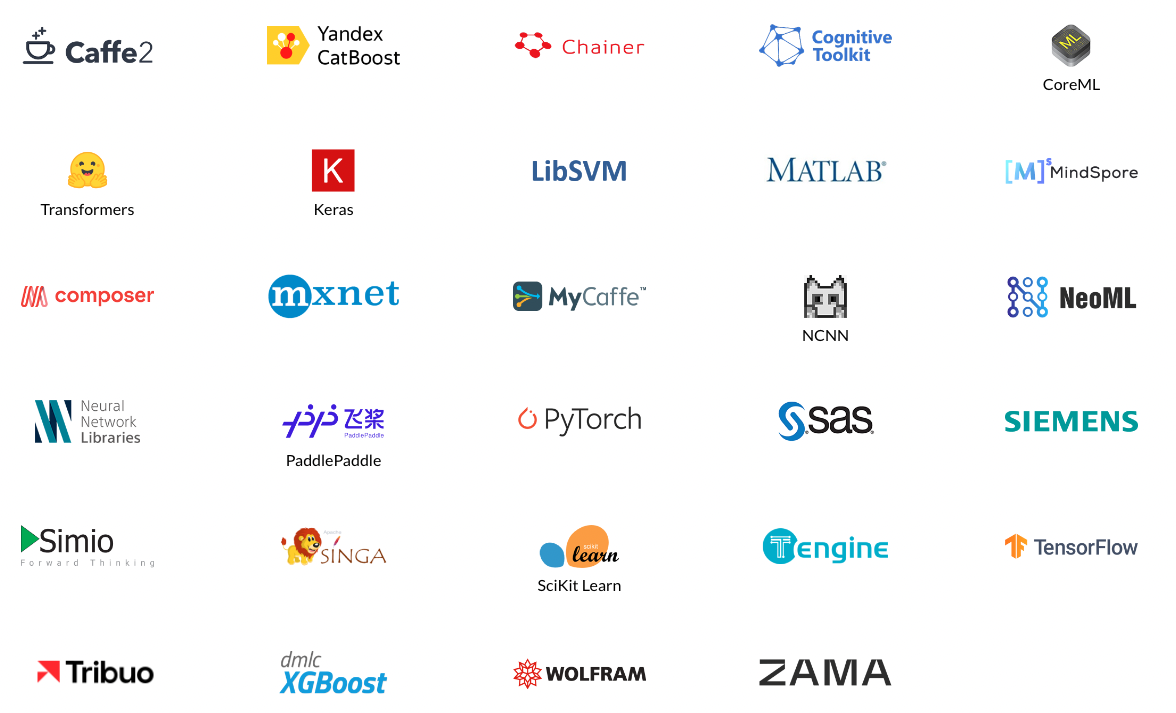

Import models from virtually any training platform.

We provide the ability to effortlessly import models trained in virtually any AI/ML training platform out there. We integrate with multiple training tools to make it as simple as possible to create highly performant edge AI solutions.

Take a look at our documentation to see how you can import models using ONNX, TensorFlow, PyTorch, TeachableMachine, or Edge Impulse.

Highly performant on selected devices.

The AI manager comes pre-installed on selected devices, saving you valuable setup and configuration time and ensuring that you can roll out your edge AI solution at scale within minutes.

Would you like your hardware to be supported? Please do contact us.

Get started.

Getting started is easy, and we will provide support from start to end. Read more about our support packages and licensing here.